About

Advances in artificial intelligence and deep learning, especially in the fields of computer vision and generative models, have made photo-realistic manipulation of images and video footage increasingly accessible, lowering the skill and time investment needed to produce visually convincing tampered (fake) footage or imagery. This has resulted in the rise of so-called deepfakes, machine-learning methods specifically designed to produce fake footage en masse.

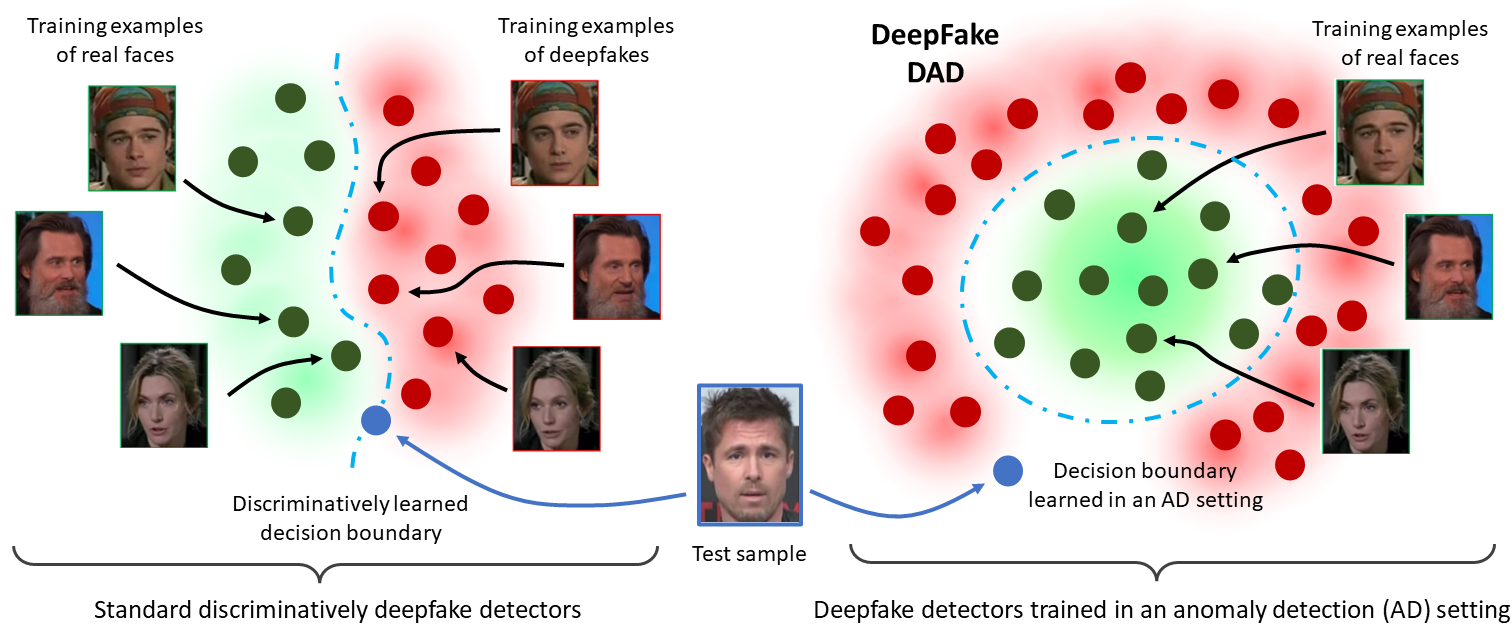

To prevent illicit activities enabled by deepfake technologies, it is paramount to have highly automated and reliable means of detecting deepfakes at one’s disposal. Such detection technology not only enables efficient (large-scale) screening of image and video content but also allows non-experts to determine whether a given video or image is real or manipulated. Within the research project Deepfake detection using anomaly detection methods (DeepFake DAD), we aim to address this issue by conducting research on fundamentally novel methods for deepfake detection that overcome the deficiencies of current solutions in this problem domain. Existing deepfake detectors rely on (semi-)handcrafted features that have been shown to work against a predefined set of publicly available or known deepfake generation methods. However, detection techniques developed in this manner are vulnerable to unknown or unseen (future) deepfake generation methods, i.e., they fail to detect fakes produced by them. The goal of DeepFake DAD is, therefore, to develop detection models that can be trained in a semi-supervised or unsupervised manner without relying on training samples from publicly available deepfake generation techniques, i.e., within so-called anomaly detection frameworks trainable in a one-class learning regime.
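As a rough illustration of what a one-class learning regime means in practice, the sketch below fits a very simple model to real samples only and flags anything that deviates strongly from it. It is not the project's method: the per-feature Gaussian model, the helper names (`fit_one_class`, `anomaly_score`, `make_samples`), the 8-dimensional synthetic feature vectors, and the 95% threshold are all assumptions chosen for brevity.

```python
import random

def fit_one_class(real_features):
    """Estimate per-feature mean and standard deviation from real samples only."""
    columns = list(zip(*real_features))
    means = [sum(col) / len(col) for col in columns]
    stds = [
        (sum((x - m) ** 2 for x in col) / (len(col) - 1)) ** 0.5
        for col, m in zip(columns, means)
    ]
    return means, stds

def anomaly_score(sample, means, stds):
    """Sum of squared z-scores: large when a sample is unlike the real data."""
    return sum(((x - m) / s) ** 2 for x, m, s in zip(sample, means, stds))

def make_samples(rng, count, dim, shift):
    """Hypothetical stand-in for feature vectors extracted from images."""
    return [[rng.gauss(shift, 1.0) for _ in range(dim)] for _ in range(count)]

def flag_rate(samples, means, stds, threshold):
    """Fraction of samples whose anomaly score exceeds the threshold."""
    flagged = sum(anomaly_score(s, means, stds) > threshold for s in samples)
    return flagged / len(samples)

rng = random.Random(0)

# Training uses real samples only; no fake samples are needed.
real_train = make_samples(rng, 500, 8, shift=0.0)
means, stds = fit_one_class(real_train)

# Threshold set so that roughly 5% of real training samples are flagged.
train_scores = sorted(anomaly_score(s, means, stds) for s in real_train)
threshold = train_scores[int(0.95 * len(train_scores))]

# At test time, "fakes" come from a shifted distribution never seen in training.
real_test = make_samples(rng, 200, 8, shift=0.0)
fake_test = make_samples(rng, 200, 8, shift=3.0)

real_flag_rate = flag_rate(real_test, means, stds, threshold)
fake_flag_rate = flag_rate(fake_test, means, stds, threshold)
print(f"real flagged: {real_flag_rate:.2f}, fake flagged: {fake_flag_rate:.2f}")
```

Because the model never sees manipulated data during fitting, its decision does not depend on any particular generation method; in a real system the synthetic vectors would be replaced by learned image or video representations.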

The main expected tangible results of the research project are highly robust deepfake detection solutions that outperform the current state-of-the-art in terms of generalization capabilities and can assist end-users and platform providers in automatically detecting tampered imagery and video, allowing them to act accordingly and avoid the negative personal, societal, economic, and political implications of widespread, undetectable fake footage.

DeepFake DAD (ARIS: J2-50065) is a fundamental research project funded by the Slovenian Research and Innovation Agency (ARIS) for the period from 1 October 2023 to 30 September 2026 (1.51 FTE per year).

The Principal Investigator (PI) of DeepFake DAD is Prof. Vitomir Štruc, PhD.

Link to SICRIS: Follow Me.

Project overview

DeepFake DAD is structured into 6 work packages:

- WP1: Coordination and project management

- WP2: Supervised models and data representations

- WP3: From supervised to semi-supervised models

- WP4: Unsupervised DeepFake detection

- WP5: Demonstration and exploitation

- WP6: Dissemination

The R&D work on these work packages is expected to result in:

- The development of discriminative data representations

- Semi-supervised deepfake detectors

- Unsupervised and one-class detection models.

Project phases

- Year 1: Activities on work packages WP1, WP2, WP6

- Year 2: Activities on work packages WP1, WP2, WP3, WP4, WP6

- Year 3: Activities on work packages WP1, WP3, WP4, WP5, WP6

Partners

DeepFake DAD is conducted jointly by:

- The Laboratory for Machine Intelligence (LMI), Faculty of Electrical Engineering, University of Ljubljana

- The Computer Vision Laboratory (CVL), Faculty of Computer and Information Science, University of Ljubljana

- Alpineon Ltd.

Participating researchers

- Prof. Vitomir Štruc, PhD – Project Leader (PI)

- Assoc. Prof. Janez Perš, PhD, Researcher LMI

- Ass. Janez Križaj, PhD, Researcher LMI

- Ass. Klemen Grm, Researcher LMI

- Ass. Marija Ivanovska, Researcher LMI

- Žiga Babnik, Researcher LMI

- Prof. Peter Peer, PhD, PI at CVL

- Ass. Blaž Meden, PhD, Researcher CVL

- Darian Tomašević, Researcher CVL

- Ass. Matej Vitek, Researcher at CVL and LMI

- Jerneja Žganec Gros, PhD, PI at Alpineon

International Advisory Committee

- Assoc. Prof. Walter Scheirer, PhD, University of Notre Dame, USA

- Assoc. Prof. Terence Sim, PhD, National University of Singapore

- Assoc. Prof. Vishal Patel, Johns Hopkins University

Project publications

Journal Publications

- Marija Ivanovska; Leon Todorov; Peter Peer; Vitomir Štruc: SelfMAD++: Self-Supervised Foundation Model with Local Feature Enhancement for Generalized Morphing Attack Detection, Information Fusion (SCI IF = 15.5), vol. 127, Part C, no. 103921, pp. 1-16, 2026 [PDF]

- Borut Batagelj; Andrej Kronovšek; Vitomir Štruc; Peter Peer: Robust cross-dataset deepfake detection with multitask self-supervised learning, ICT Express (SCI IF = 4.1), pp. 1-5, 2025 [PDF].

- Marija Ivanovska; Vitomir Štruc, Y-GAN: Learning Dual Data Representations for Anomaly Detection in Images, Expert Systems with Applications (SCI IF = 8.5), 2024 [PDF].

Conference Publications

- Marija Ivanovska; Leon Todorov; Naser Damer; Deepak Kumar Jain; Peter Peer and Vitomir Štruc, SelfMAD: Enhancing Generalization and Robustness in Morphing Attack Detection via Self-Supervised Learning, In: IEEE International Conference on Automatic Face and Gesture Recognition 2025, pp. 1-10, 2025 [PDF]

- Elahe Soltandoost; Richard Plesh; Stephanie Schuckers; Peter Peer; Vitomir Štruc: Extracting Local Information from Global Representations for Interpretable Deepfake Detection, In: Proceedings of IEEE/CVF Winter Conference on Applications of Computer Vision – Workshops (WACV-W) 2025, pp. 1-11, Tucson, USA, 2025 [PDF]

- Leon Alessio; Marko Brodarič; Peter Peer; Vitomir Štruc; Borut Batagelj: Prepoznava zamenjave obraza na slikah osebnih dokumentov, In: Proceedings of ERK 2024, pp. 1-4, Portorož, Slovenia, 2024 [PDF]

- Lovro Sikošek; Marko Brodarič; Peter Peer; Vitomir Štruc; Borut Batagelj: Detection of Presentation Attacks with 3D Masks Using Deep Learning, In: Proceedings of ERK 2024, pp. 1-4, 2024 [PDF]

- Krištof Ocvirk; Marko Brodarič; Peter Peer; Vitomir Štruc; Borut Batagelj: Primerjava metod za zaznavanje napadov ponovnega zajema, In: Proceedings of ERK, pp. 1-4, 2024 [PDF]

- Marko Brodarič; Peter Peer; Vitomir Štruc: Towards Improving Backbones for Deepfake Detection, In: Proceedings of ERK 2024, pp. 1-4, 2024.

- Luka Dragar, Peter Rot, Peter Peer, Vitomir Štruc, Borut Batagelj, W-TDL: Window-Based Temporal Deepfake Localization, Proceedings of the 2nd International Workshop on Multimodal and Responsible Affective Computing (MRAC ’24), held at the 32nd ACM International Conference on Multimedia (MM ’24), October 28-November 1, 2024, Melbourne, VIC, Australia. ACM, New York, NY, USA, 6 pages [PDF]

- Peter Rot, Philipp Terhorst, Peter Peer, Vitomir Štruc, ASPECD: Adaptable Soft-Biometric Privacy-Enhancement Using Centroid Decoding for Face Verification, Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2024 [PDF]

- Marija Ivanovska, Vitomir Štruc, On the Vulnerability of Deepfake Detectors to Attacks Generated by Denoising Diffusion Models, Proceedings of WACV Workshops, pp. 1051-1060, 2024 [PDF]

- Marko Brodarič; Peter Peer; Vitomir Štruc, Cross-Dataset Deepfake Detection: Evaluating the Generalization Capabilities of Modern DeepFake Detectors, In: Proceedings of the 27th Computer Vision Winter Workshop (CVWW), pp. 1-10, 2024 [PDF].

Bachelor Theses

- Tadej Logar, Odkrivanje globokih ponaredkov z video transformerji, Supervisor(s): Peter Peer, Borut Batagelj, 2024 [PDF]

- Tilen Ožbot, Odkrivanje umetno ustvarjenih slik, Supervisor(s): Peter Peer, Borut Batagelj, 2024 [PDF]

Master Theses

- Jernej Sabadin, Generiranje sintetičnih obrazov z difuzijskimi modeli, Supervisor(s): Vitomir Štruc, Darian Tomašević [PDF]

- Nikola Maric, Morphing attack detection using multimodal large language models, Supervisor(s): Vitomir Štruc, Marija Ivanovska [PDF]

- Andrej Kronovšek, Prepoznavanje globokih ponaredkov z enorazrednim učenjem, Supervisor(s): Peter Peer, Borut Batagelj, 2024 [PDF]

- Anže Mur, Odkrivanje globokih ponaredkov z analizo spremenjenih in nespremenjenih območij, Supervisor(s): Peter Peer, Borut Batagelj, 2024 [PDF]

- Vid Križnar, Detekcija artefaktov generativnih nasprotniških mrež kot pomoč pri detekciji globokih ponaredkov, Supervisor(s): Peter Peer, Borut Batagelj, 2024 [PDF]

Media Coverage

- Vitomir Štruc, Ponarejeni video (še) ni popoln, Od bita do bita (podcast), [Link]

- Vitomir Štruc, Ko videoposnetek ni več dokaz: “Preverite drugje, preden ukrepate“, MMC [Link]

- Vitomir Štruc, Ah, ta splet: DeepFake, RTV SLO [Link]

- Vitomir Štruc, Ali ste vedeli, da slovenski raziskovalci razvijajo tehnologijo za bolj učinkovito zaznavanje globokih ponaredkov?, Ne/Ja, STA [Link]

- Vitomir Štruc, Slovenski raziskovalci razvijajo tehnologijo za bolj učinkovito zaznavanje globokih ponaredkov, STA [Link]

- Žiga Emeršič, Kaj je res in kaj je laž?, Media coverage as part of Tednik, RTV SLO [Link]

Invited Talks

- Vitomir Štruc, DeepFake: Kaj to je in zakaj je pomembno!, Invited lecture for Koroško društvo Akademija Znanosti in Umetnosti (KSAK), November 2025

- Vitomir Štruc, Face Manipulation Detection: From DeepFakes to Face Morphing Attacks, Workshop on Artificial Intelligence for Multimedia Forensics and Disinformation Detection – held in conjunction with WACV 2025, February 2025

- Borut Batagelj, From biometrics to deepfake detection, Invited lecture at the IILM College of Engineering & Technology, IILM University, Greater Noida, Uttar Pradesh, India, October 2024

- Vitomir Štruc, DeepFake: Kaj to je in zakaj je pomembno!, Invited lecture for the Lions Club Slovenj Gradec, November 2024

- Vitomir Štruc, Prepoznamo ponarejene video posnetke?, Skeptiki v Pubu, Ljubljana, November 2024

- Vitomir Štruc, AI as a challenge for scientific journalists, Konferenca znanstvenih novinarjev Balkana, Center Rog, Ljubljana, September 2024

- Peter Peer, Deepfakes Detection Generalization, Lecture at FERIT, Fakultet elektrotehnike, računarstva i informacijskih tehnologija Osijek, July 2024

- Vitomir Štruc, DeepFakes: From Generation to Detection, Keynote talk at YBERC 2024, June 2024

- Vitomir Štruc, DeepFake: Kaj to je in zakaj je pomembno!, Invited lecture for the Lions Club Koper, June 2024

- Vitomir Štruc, Kratka zgodovina umetne inteligence, Invited lecture at the Simfonija Elektrotehnike+ event, May 2024

- Peter Peer, Biometrics recognition and generation, invited presentation at the School of Electronics Engineering, Kyungpook National University, Daegu, Republic of Korea, May 2024

- Peter Peer, DeepFakes – a menace to society, invited presentation at the Electronics and Telecommunications Research Institute, Daejeon, Korea, May 2024

Events organized

- 2nd International Workshop on Synthetic Realities and Data in Biometric Analysis and Security (in the scope of WACV 2026); Organizers: Fadi Boutros, Eduarda Caldeira, Laura Cassani, Naser Damer, Marija Ivanovska, Vishal Patel, Ajita Rattani, Anderson Rocha, Matthew Stamm, Vitomir Štruc [Webpage]

- 2nd International Workshop on Synthetic Realities and Biometric Security: Advances in Forensic Analysis and Threat Mitigation (in the scope of BMVC 2025); Organizers: Naser Damer, Marija Ivanovska, Vishal Patel, Ajita Rattani, Anderson Rocha, Vitomir Štruc [Webpage]

- 2nd International Workshop on Synthetic Data for Face and Gesture Analysis (SD-FGA) (in the scope of FG 2025); Organizers: Vitomir Štruc, Xilin Chen, Fadi Boutros, Naser Damer, Deepak Jain [Webpage]

- International Workshop on Synthetic Realities and Data in Biometric Analysis and Security (in the scope of WACV 2025); Organizers: Fadi Boutros, Naser Damer, Marija Ivanovska, Vishal Patel, Ajita Rattani, Anderson Rocha, Vitomir Štruc [Webpage]

- 1st International Workshop on Synthetic Realities and Biometric Security: Advances in Forensic Analysis and Threat Mitigation (in the scope of BMVC 2024); Organizers: Naser Damer, Marija Ivanovska, Vishal Patel, Ajita Rattani, Anderson Rocha, Vitomir Štruc [Webpage]

- Special Session on “Recent Advances in Detecting Manipulation Attacks on Biometric Systems” (in the scope of IEEE IJCB 2024); Organizers: Abhijit Das, Naser Damer, Marija Ivanovska, Raghavendra Ramachandra, Vitomir Štruc [Webpage]

- Special Session on “Generative AI for Futuristic Biometrics” (in the scope of IEEE IJCB 2024); Organizers: Sudipta Banerjee, Nasir Memon, Kiran Raja, Vitomir Štruc [Webpage]

- 1st International Workshop on Synthetic Data for Face and Gesture Analysis 2024 (in the scope of IEEE FG 2024); Organizers: Fadi Boutros, Naser Damer, Deepak Kumar Jain, Pourya Shamsolmoali, Vitomir Štruc [Webpage]

Special Issues organized

- IEEE TBIOM Special Issue on: “Generative AI and Large Vision-Language Models for Biometrics”. [PDF]

Deliverables

Some of the project deliverables are reports, which (in the first stage) are made available to the funding agency only. These deliverables will serve as the basis for the project publications and will be released to the general public after publication in a peer-reviewed venue. The deliverables can be accessed from the DeepFakeDAD Deliverable page.

Funding agency